Leveraging data in converting processes: Part 1

- Kevin Lifsey

- Mar 7, 2024


By Steve Lange, managing member, ProcessDev LLC

Abstract

Increased availability of sensors and data provides new opportunities to improve quality and process reliability in converting processes. This three-part series covers the process of effectively leveraging data while avoiding pitfalls. Part I: Preparing for a Converting Process Data Project covers the necessary actions to ensure the right data are gathered in the right ways for the right reasons. Part II: Converting Process Data Wrangling describes the steps of aggregating, cleaning, and exploring data. Part III: Converting Process Data Analytics Implementation outlines analysis methods and tips for applying and preserving analysis insights.

Part I: Preparing for a Converting Process Data Project

Falling prices for sensors and data storage, together with the wide availability of open-source and commercial software, are creating more opportunities to leverage web-converting process data [1,2]. These technology components are necessary, but not sufficient, for a web-converting operation to succeed in using its data to improve results. In Part I of this three-part series on leveraging data in converting processes, we focus on preparing for the process of extracting business value from data.

Thinking critically first about who will make decisions based on the data insights, who will act on those decisions, what information those people truly need, and which opportunities have the greatest value potential will help avoid expensive solutions that no one wants or will use (see Figure 1). Focusing on collecting the right data for the users, grounded in the fundamental science of the process, will avoid purchasing sensors that measure the wrong thing or that lack the necessary resolution or sampling frequency. Having data but no one to aggregate, clean, visualize, or analyze it just creates data warehouses with untapped value, like dusty library stacks that no one visits. And those who analyze data, if they are not the users of the insights, must be able to present the insights in a way that users find helpful.

Focusing first on the “who” and “why”

One principle of Design Thinking, empathy for the users of a product [3], applies to planning what data the people in a converting operation truly need. Who will collect the data, who will analyze it, and, most importantly, who will make decisions and act on it? For example, the data operators need to troubleshoot a converting process, keeping it running and making top-quality product despite material variation and other “noise factors,” will differ from the data a plant manager needs to keep her finger on the pulse of the entire operation, or from what a senior manager might need to supervise multiple manufacturing sites. The data the Maintenance Dept. needs differ from those the Quality Assurance Dept. needs. Even where the data needs are the same, the way the insights should be communicated may differ, ranging from an email or text message with prescribed actions to an elaborate dashboard or an automated process-control algorithm.

Partnering with the people whom timely information can help the most, and understanding why it would help, allows a vision of a helpful solution to emerge and direct the entire project toward a useful outcome that is more likely to be maintained and improved over time. Knowing how these people work, what their daily problems are, and how information delivered to them the right way can improve what they do makes it far more likely that they will use the data insights that are delivered. A data dashboard that includes too much information, or information of the wrong type, might just confuse operators and cause them to ignore it. Alerting systems that often generate false alarms will similarly cause operators to ignore real problems because they cannot trust the information they are getting.
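The false-alarm problem can be made concrete with a little arithmetic. The sketch below assumes an in-control signal that is normally distributed with independent samples (real process data are often autocorrelated, so treat the numbers as rough) and shows how requiring several consecutive out-of-limit samples before alerting, a common persistence rule, cuts nuisance alarms. The function names are hypothetical and not part of any existing alarm system.

```python
from statistics import NormalDist


def false_alarm_prob(k_sigma: float) -> float:
    """Chance that one in-control, normally distributed sample
    falls outside +/- k_sigma limits."""
    return 2.0 * (1.0 - NormalDist().cdf(k_sigma))


def false_alarms_per_hour(k_sigma: float, samples_per_s: float,
                          consecutive: int = 1) -> float:
    """Approximate false alarms per hour when an alarm needs `consecutive`
    out-of-limit samples in a row (assumes independent samples and a small
    per-sample probability, so p ** consecutive is a fair estimate)."""
    p = false_alarm_prob(k_sigma)
    return 3600.0 * samples_per_s * p ** consecutive


def persistent_alarms(values, low, high, consecutive=3):
    """Return the indices at which an alarm fires: once per excursion,
    when the run of samples outside [low, high] first reaches `consecutive`."""
    alarms, run = [], 0
    for i, v in enumerate(values):
        if v < low or v > high:
            run += 1
            if run == consecutive:
                alarms.append(i)
        else:
            run = 0  # back within limits: reset the run counter
    return alarms


if __name__ == "__main__":
    print(false_alarms_per_hour(3.0, 1.0))                 # single-sample rule
    print(false_alarms_per_hour(3.0, 1.0, consecutive=2))  # two-in-a-row rule
    print(persistent_alarms([0, 5, 5, 0, 5, 5, 5, 5, 0], -1, 1))  # -> [6]
```

With 3-sigma limits on a 1 Hz signal, the single-sample rule gives roughly 9.7 false alarms per hour, while requiring two in a row drops that to roughly 0.026 per hour, at the cost of a slightly slower response to real upsets.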

FIGURE 1. Key elements of preparing for a data project

But whom should the data project focus on? Everyone has problems or opportunities where data analytics might help. An initial stage of surveying individuals or groups to assess where the greatest losses or potential gains exist might be needed. Existing data on quality defects or complaints, machine downtime, or maintenance costs can also be mined to help decide whom to engage first. Getting information from both management and people on the manufacturing floor is critical to avoid misunderstandings about what the real problem to be solved is. Translating the losses into financial metrics allows them to be compared and helps secure management support for the project.
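Translating losses into financial metrics can be as simple as multiplying event frequency by cost per event and ranking the results in a Pareto view. The sketch below is a minimal illustration; the loss categories and all numbers are hypothetical placeholders, not data from any real operation.

```python
from dataclasses import dataclass


@dataclass
class Loss:
    area: str               # loss category, line, or department (hypothetical labels)
    events_per_year: float  # e.g., downtime events, scrap incidents, repairs
    cost_per_event: float   # currency units per event (lost output + material + labor)

    @property
    def annual_cost(self) -> float:
        return self.events_per_year * self.cost_per_event


def pareto(losses):
    """Rank losses by annual cost and attach each item's cumulative share of the total."""
    ranked = sorted(losses, key=lambda loss: loss.annual_cost, reverse=True)
    total = sum(loss.annual_cost for loss in ranked)
    rows, running = [], 0.0
    for loss in ranked:
        running += loss.annual_cost
        rows.append((loss.area, loss.annual_cost, running / total if total else 0.0))
    return rows


if __name__ == "__main__":
    candidates = [
        Loss("Coater downtime", 40, 2500.0),
        Loss("Slitter wrinkle scrap", 300, 150.0),
        Loss("Idler bearing failures", 60, 800.0),
    ]
    for area, cost, share in pareto(candidates):
        print(f"{area:<24} {cost:>10,.0f} {share:6.1%}")
```

The cumulative-share column makes it easy to see which few areas account for most of the loss and therefore which people to engage first.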

What to measure and why?

Knowing the end goal is not the same as knowing specifically what to measure, because some key performance indicators (KPIs) are not directly measurable and might require a “soft sensor” or an intermediate measurement as a proxy. For example, while a web-wrinkle sensor might not be practical or even possible in every span of a web path, it might be practical to collect driven-roll speed and web-width data in critical spans as inputs to a predictive model for wrinkling and web lateral control.
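As a hedged illustration of an intermediate measurement, driven-roll speeds can be turned into an estimate of machine-direction strain and tension in the span between two driven rolls. The sketch assumes steady state, no slip on either roll, and a linear-elastic web; under those assumptions conservation of mass gives (1 + ε₂)/(1 + ε₁) = V₂/V₁, where V₁ and V₂ are the upstream and downstream roll surface speeds, ε₁ is the strain of the web arriving on the upstream roll, and ε₂ is the strain in the span. The modulus, thickness, and width values are hypothetical, and a wrinkling or lateral-control model would use estimates like these as inputs rather than be replaced by them.

```python
def span_strain(v_upstream: float, v_downstream: float,
                entering_strain: float = 0.0) -> float:
    """Steady-state MD strain in the span between two driven rolls,
    from mass conservation with no slip: (1 + e2) / (1 + e1) = v2 / v1.
    Speeds may be in any unit, as long as both use the same one."""
    return (v_downstream / v_upstream) * (1.0 + entering_strain) - 1.0


def span_tension_n(strain: float, modulus_pa: float,
                   thickness_m: float, width_m: float) -> float:
    """Linear-elastic tension estimate T = E * t * w * strain, in newtons.
    The width can come from the web-width sensor in that span."""
    return modulus_pa * thickness_m * width_m * strain


if __name__ == "__main__":
    # Hypothetical film: E = 4 GPa, 50 um thick, 1.0 m wide; 0.2% draw.
    e = span_strain(v_upstream=100.0, v_downstream=100.2)
    print(f"strain ~ {e:.4f}, tension ~ {span_tension_n(e, 4e9, 50e-6, 1.0):.0f} N")
```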

FIGURE 2. Steps to focusing the data project

One tendency is to assume that “everything” must be measured because it is not yet known what is critical about the process (see Figure 2). Following that path will overcomplicate the data model and increase system expense before the value has been proven. It is far more manageable to study the science and engineering fundamentals of a few unit operations, determine the factors most likely to control the process, and instrument the process based on that model. The success of smaller projects can justify installing additional sensors and collecting more data later, provided some upfront thinking on scalability goes into the data-architecture and data-model planning. Literature searches of technical publications and patents can reveal the major process factors in web-converting processes and indicate where data collection will yield the best predictions.

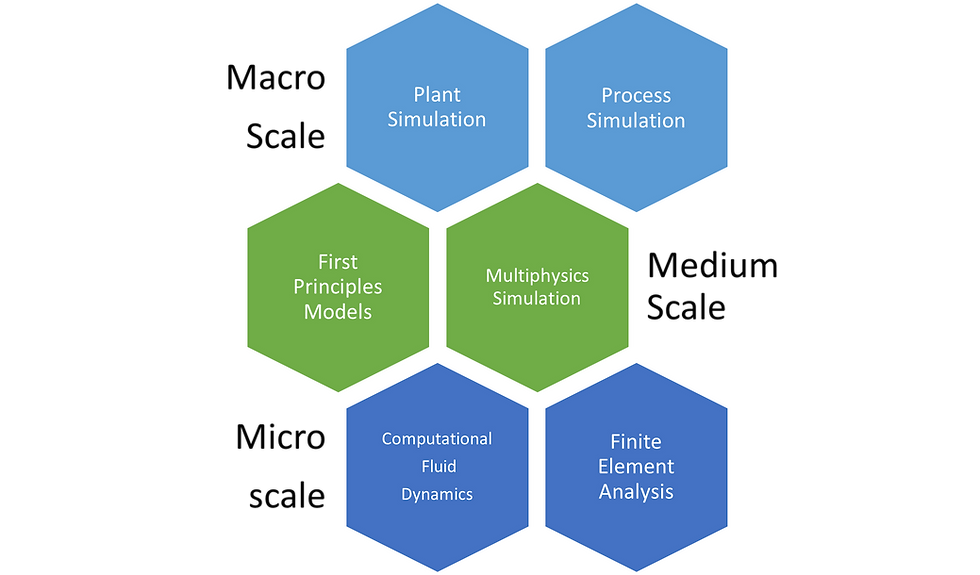

Computer simulation of webs in converting operations can provide deep insights into the material properties and process factors affecting web behavior [4]. Multiphysics simulation software, chemical process simulators, structural mechanics, computational fluid dynamics, and plant simulation tools are available to help define critical measurements and even to build a “digital twin” of a physical process (see Figure 3).

Processes are interactions of materials and energy, so measurements will be needed for material properties and states as well as for process variables and machine states. For web measurement, sensors exist for coating thickness, web thickness and basis weight, web defects, web width and lateral position, tension, moisture, and velocity. Equipment components increasingly make data available “at the edge” to enable equipment health monitoring. Digital sensors for temperature, fluid flow rate and pressure, vibration, position, moisture/humidity, and force/pressure are available.
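One way to keep the data model scalable, in line with the earlier point about data-architecture planning, is a “long” layout: a registry that describes each measurement point once (material, process, or machine category; unit; location; sampling period; resolution) plus a single table of (timestamp, tag, value) readings. Adding a sensor later then means registering a new tag rather than changing the schema. The sketch below is an in-memory toy with hypothetical tag names; a real system would use a historian or database.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class Category(Enum):
    MATERIAL = "material property/state"
    PROCESS = "process variable"
    MACHINE = "machine state"


@dataclass(frozen=True)
class Tag:
    name: str               # hypothetical naming scheme, e.g. "slitter.web_width"
    category: Category
    unit: str
    location: str           # span or unit operation where the sensor sits
    sample_period_s: float
    resolution: float       # smallest reportable change, in `unit`


@dataclass
class Store:
    tags: dict = field(default_factory=dict)
    readings: list = field(default_factory=list)  # (timestamp, tag name, value)

    def register(self, tag: Tag) -> None:
        self.tags[tag.name] = tag

    def record(self, name: str, value: float,
               ts: Optional[datetime] = None) -> None:
        if name not in self.tags:
            raise KeyError(f"unregistered tag: {name}")
        self.readings.append((ts or datetime.now(timezone.utc), name, value))

    def series(self, name: str):
        return [(ts, v) for ts, n, v in self.readings if n == name]


if __name__ == "__main__":
    store = Store()
    store.register(Tag("slitter.web_width", Category.MATERIAL, "mm", "span 12", 0.1, 0.05))
    store.record("slitter.web_width", 1002.4)
    # A sensor added later: register it; the readings layout is unchanged.
    store.register(Tag("idler7.vibration", Category.MACHINE, "mm/s", "idler 7", 1.0, 0.01))
    store.record("idler7.vibration", 0.8)
    print(len(store.readings), store.series("idler7.vibration")[0][1])
```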

Sensors must be selected with enough discrimination power to detect meaningful changes at the relevant frequencies. For example, slow equipment degradation due to wear and tear that takes place over weeks or months requires a different measurement frequency than critical temperatures or pressures that can change meaningfully on a millisecond or second time scale. The resolution of the sensor must be sufficient to record a meaningful change at the required confidence for a given sample size.
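The frequency and resolution requirements can be roughed out with standard formulas. The sketch below assumes (1) sampling at least twice the fastest frequency of interest, the Nyquist limit, with an adjustable oversampling factor because practitioners commonly sample well above that minimum; (2) a normal-approximation sample-size formula for detecting a mean shift δ between two groups with standard deviation σ, n = 2·((z₁₋α/₂ + z₁₋β)·σ/δ)²; and (3) a 10:1 rule of thumb relating sensor resolution to the smallest change of interest. These are generic engineering conventions, not requirements stated in this article, and the example numbers are hypothetical.

```python
import math
from statistics import NormalDist


def min_sample_rate_hz(fastest_period_s: float, oversample: float = 2.0) -> float:
    """Sampling rate needed for a change whose shortest period is
    `fastest_period_s`; oversample=2 is the Nyquist minimum."""
    return oversample / fastest_period_s


def samples_per_group(sigma: float, delta: float,
                      alpha: float = 0.05, power: float = 0.90) -> int:
    """Samples per group to detect a mean shift `delta` (two-sided test,
    normal approximation, same known sigma in both groups)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) * sigma / delta) ** 2)


def resolution_ok(resolution: float, delta: float, ratio: float = 10.0) -> bool:
    """Rule of thumb: resolution no coarser than 1/ratio of the change to detect."""
    return resolution <= delta / ratio


if __name__ == "__main__":
    # Hypothetical: temperature swings with periods down to 0.5 s vs. wear over ~1 week.
    print(min_sample_rate_hz(0.5, oversample=10))             # fast process signal
    print(min_sample_rate_hz(7 * 24 * 3600, oversample=10))   # slow wear trend
    print(samples_per_group(sigma=1.0, delta=0.5))            # 85
    print(resolution_ok(resolution=0.1, delta=0.5))           # False: need <= 0.05
```

Halving the change to be detected roughly quadruples the required sample size, which is why sensor noise and resolution matter as much as sampling rate.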

Sensors need to be placed in the process appropriately to avoid lags between sensing and control elements. Measuring the width of a web in a span that is not critical to the process will not be as effective as measuring where it matters the most, such as at a coating or slitting operation.
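A quick way to quantify the lag between a sensor and the point where its measurement matters (or a control element) is transport delay: the web path length between the two locations divided by web speed. The same delay, expressed in samples, can be used to line up upstream and downstream measurements of the same piece of web. The sketch assumes constant web speed and negligible web stretch between the locations; the function names, distances, and speeds are hypothetical.

```python
def transport_lag_s(path_length_m: float, web_speed_m_per_min: float) -> float:
    """Time for a point on the web to travel from the sensor to the operation."""
    return path_length_m / (web_speed_m_per_min / 60.0)


def lag_in_samples(lag_s: float, sample_rate_hz: float) -> int:
    """Transport lag expressed as a whole number of samples."""
    return round(lag_s * sample_rate_hz)


def align_to_web_position(upstream, downstream, lag_samples: int):
    """Pair each upstream sample with the downstream sample taken when the
    same section of web arrived there (constant speed assumed)."""
    return list(zip(upstream, downstream[lag_samples:]))


if __name__ == "__main__":
    lag = transport_lag_s(path_length_m=12.0, web_speed_m_per_min=300.0)
    n = lag_in_samples(lag, sample_rate_hz=10.0)
    print(lag, n)  # 2.4 s of lag, i.e. 24 samples at 10 Hz
```

If the lag is long compared with how quickly the process can drift, that suggests moving the sensor closer to the operation it is meant to protect.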

In general, the conversion steps that add the most value to the raw materials are where the most money is made and lost in the process. Often these key steps are the processes with the most proprietary intellectual property, i.e., the core competencies of the enterprise. Alternatively, processes and equipment that are replicated many times (such as web idlers) can create recurring issues because of their scale in the operation, and a data solution for them can have high leverage. Another approach is to focus on the most obvious issues that require daily action, because solving them will garner support for more in-depth data analytics with bigger payouts.

Once the critical measurements are known and their frequency and resolution defined, an additional consideration is how long the data will be stored and accessible. Practically speaking, data must remain available long enough to meet regulatory requirements and to cover the typical time a product spends in the supply chain, including use by customers, so that quality troubleshooting remains feasible and future defects can be prevented. Cloud storage is easily scaled, can be overwritten as needed, and facilitates aggregating disparate data sources into the large datasets needed to address complex problems. Data security, particularly for data tied directly to production systems, grows more critical as the data generated in converting operations become more integrated into operations.
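Retention length and raw storage volume can be estimated up front. The sketch below takes the retention period as the longer of the regulatory requirement and the product's supply-chain-plus-customer-use time, then sizes uncompressed storage from tag count, sampling rate, and bytes per sample. The 16 bytes per sample (an 8-byte timestamp plus an 8-byte value), the function names, and all example figures are hypothetical; compression and historian settings often reduce real volumes considerably.

```python
def retention_days(regulatory_days: int, supply_chain_days: int,
                   customer_use_days: int) -> int:
    """Keep data for whichever is longer: the regulatory requirement or the
    time product may still be in the supply chain or in customers' hands."""
    return max(regulatory_days, supply_chain_days + customer_use_days)


def raw_storage_bytes(n_tags: int, samples_per_s: float,
                      bytes_per_sample: int, days: int) -> float:
    """Uncompressed volume for n_tags sampled at a common rate."""
    return n_tags * samples_per_s * bytes_per_sample * days * 86_400


if __name__ == "__main__":
    days = retention_days(regulatory_days=730, supply_chain_days=180, customer_use_days=730)
    size = raw_storage_bytes(n_tags=200, samples_per_s=1, bytes_per_sample=16, days=days)
    print(days, f"{size / 1e9:.0f} GB")  # 910 days, about 252 GB uncompressed
```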

FIGURE 3. Modeling/simulation options for determining what to measure

Resources needed

With the customer for the data insights and actions selected, and with what to measure (at the right resolution and time interval) defined to form a data model, it is important to build the team needed to execute the data collection, storage, preparation, analysis, and actions. Typical roles on the team include a data engineer who can manage the systems for data collection, storage, and security; a data scientist or analyst who can prepare and analyze the data; and subject-matter experts such as operators and process engineers, as well as a financial resource and a management sponsor. Merging deep knowledge of the process with data analytics provides the best chance of the investment paying out. Once the team is assembled, the hard work of planning how to procure the data and do something useful with it can begin in earnest.

Equipping the team with computing hardware and analysis software scaled to the data and to the financial payout of the project will ensure the team is not held back by a lack of data storage or computational power.

Conclusion

Starting a data project by defining who has a problem worth solving and what they truly need, setting up a team with the right people and resources, and measuring, analyzing and presenting data insights effectively for the users will increase your return on investment.

In Part II of this series, Converting Process Data Wrangling, we will describe the steps of aggregating, cleaning, and exploring data from converting operations, because collecting data is just the first step toward leveraging its value.

References

1. Leonard, Matt. “Declining price of IoT sensors means greater use in manufacturing.” Supply Chain Dive, 14 October 2019, www.supplychaindive.com/news/declining-price-iot-sensors-manufacturing/564980/.

2. Harris, Robin. “Data storage: Everything you need to know about emerging technologies.” ZDNet, 28 May 2019, www.zdnet.com/article/innovations-in-data-storage-an-executive-guide-to-emerging-technologies-and-trends/.

3. Dam, Rikke Friis, and Siang, Teo Yu. “5 Stages in the Design Thinking Process.” Interaction-Design, 28 July 2020, https://www.interaction-design.org/literature/article/5-stages-in-the-design-thinking-process.

4. Carrle, Jeremy, Lange, Steve, Hamm, Rich, and Ba, Shan. “Wrinkling Mechanisms of Webs with Spatially Varying Material Properties.” Proceedings of the Fourteenth International Conference on Web Handling, June 2017, https://shareok.org/handle/11244/322069.

Steve Lange is managing member of ProcessDev LLC, a manufacturing-process development consulting company. He is a retired Research Fellow from the Procter & Gamble Co., where he spent 35 years developing web-converting processes for consumer products and serving as an internal trainer in web handling, modeling/simulation and data analytics. Steve can be reached at 513-886-4538, email: stevelange@processdev.net, www.processdev.net
